OpenAI has released GPTBot, a web crawler that browses the web and collects information. Marketers and webmasters should take note, especially if they want to control which parts of their sites GPTBot can access.

Key Points to Understand about GPTBot:

Purpose of GPTBot: OpenAI built GPTBot to gather web content that can help improve its AI models and products, such as ChatGPT. The aim is better responses to user queries and prompts.

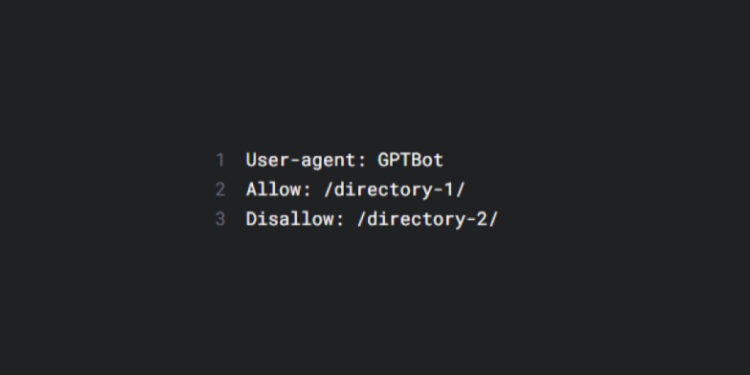

User-Agent Details: GPTBot identifies itself with the user-agent token “GPTBot”. Its full user-agent string is: “Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.0; +https://openai.com/gptbot)”.
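Because the “GPTBot” token sits inside a longer user-agent string, server-side code should search for the token instead of comparing the whole header. The Python sketch below shows one way to do that. The helpers `gptbot_version` and `is_gptbot` are hypothetical names, not part of any OpenAI library, and the case-insensitive match is a design choice of this example.

```python
import re
from typing import Optional

# Full user-agent string as quoted in this article, kept for testing.
GPTBOT_UA = (
    "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; "
    "GPTBot/1.0; +https://openai.com/gptbot)"
)

# Hypothetical pattern: the "GPTBot" token, a "/", then a dotted version,
# matched case-insensitively (an assumption of this sketch).
_GPTBOT_RE = re.compile(r"\bGPTBot/(\d+(?:\.\d+)*)", re.IGNORECASE)


def gptbot_version(user_agent: str) -> Optional[str]:
    """Return the GPTBot version claimed by a User-Agent header, or None."""
    match = _GPTBOT_RE.search(user_agent)
    return match.group(1) if match else None


def is_gptbot(user_agent: str) -> bool:
    """True if the header claims to be GPTBot (the header itself can be spoofed)."""
    return gptbot_version(user_agent) is not None


if __name__ == "__main__":
    print(gptbot_version(GPTBOT_UA))  # 1.0
    print(is_gptbot("Mozilla/5.0 (Windows NT 10.0; Win64; x64)"))  # False
```

A matching header only tells you what the client claims to be. Any client can send this string, so treat the result as a hint rather than proof of origin.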

Using robots.txt: Webmasters can use their site’s robots.txt file to grant or restrict GPTBot’s access. By adding a group that begins with “User-agent: GPTBot” and listing “Allow” or “Disallow” rules beneath it, you can specify which directories or pages GPTBot may or may not crawl. For example, “Disallow: /” in that group blocks GPTBot from the entire site.
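Before deploying new rules, you can check how they apply to GPTBot with Python’s standard-library `urllib.robotparser`. The sketch below assumes a hypothetical policy that lets GPTBot crawl only `/blog/` while leaving all other crawlers unrestricted. The paths and example.com URLs are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: GPTBot may crawl /blog/ only; every other
# crawler is unrestricted (an empty Disallow allows everything).
ROBOTS_TXT = """\
User-agent: GPTBot
Allow: /blog/
Disallow: /

User-agent: *
Disallow:
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text held in memory instead of fetching it over HTTP."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    # Pass the product token "GPTBot", not the full user-agent string:
    # this parser only looks at the text before the first "/".
    for url in (
        "https://example.com/blog/post",
        "https://example.com/private/page",
    ):
        print(url, rp.can_fetch("GPTBot", url))
    # A different crawler falls through to the "*" group.
    print(rp.can_fetch("Googlebot", "https://example.com/private/page"))
```

The “Allow” line comes before “Disallow: /” because Python’s parser applies the first matching rule in a group, while Google-style parsers pick the most specific match. With this ordering both approaches give the same answer.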

GPTBot’s IP Ranges: At the time of writing, OpenAI has published a single IP range for GPTBot, though it may add more ranges in the future.
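Since a user-agent header can be forged, a server can also check whether a request’s source address falls inside the range OpenAI publishes. This Python sketch uses the standard `ipaddress` module. The range shown, 192.0.2.0/28, is a placeholder from a block reserved for documentation (RFC 5737), not OpenAI’s real range, and `parse_ranges` and `ip_in_ranges` are hypothetical helper names. Keeping the ranges as a list makes it easy to update if OpenAI adds more.

```python
import ipaddress
from typing import Iterable, List, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]

# PLACEHOLDER: a documentation-only block (RFC 5737), NOT OpenAI's real range.
# Replace with the CIDR range(s) OpenAI publishes for GPTBot.
GPTBOT_RANGES = """\
# GPTBot ranges (one CIDR per line)
192.0.2.0/28
"""


def parse_ranges(lines: Iterable[str]) -> List[Network]:
    """Convert CIDR strings to network objects, skipping blanks and comments."""
    networks: List[Network] = []
    for line in lines:
        line = line.strip()
        if line and not line.startswith("#"):
            networks.append(ipaddress.ip_network(line))
    return networks


def ip_in_ranges(ip: str, networks: Iterable[Network]) -> bool:
    """True if the address lies in any range; IPv4/IPv6 mismatches never match."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in networks)


if __name__ == "__main__":
    nets = parse_ranges(GPTBOT_RANGES.splitlines())
    print(ip_in_ranges("192.0.2.5", nets))   # True
    print(ip_in_ranges("192.0.2.16", nets))  # False
```

A request that claims to be GPTBot but arrives from an address outside the published range is worth treating with suspicion.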

GPTBot Documentation: OpenAI publishes documentation on GPTBot for readers who want more detail.

OpenAI’s GPTBot is a notable development in web crawling. As the line between conventional search engine crawlers and AI-driven bots blurs, marketers and webmasters need to stay informed. GPTBot brings both opportunities and challenges: it can help your content reach a wider audience through AI tools, but it also raises questions about how that content is used. By understanding and using tools like robots.txt, marketers can strike a balance between visibility and control.