In a major expansion of their partnership, Amazon Web Services (AWS) and NVIDIA are bringing new supercomputing infrastructure, software, and services built specifically for generative AI to the cloud.

The collaboration pairs AWS’s cloud infrastructure with NVIDIA’s hardware and software, with the aim of changing how generative AI applications are developed and deployed.

At the center of the partnership is the arrival of NVIDIA GH200 Grace Hopper Superchips on AWS. Connected with NVIDIA’s multi-node NVLink technology, the chips give cloud users access to a large pool of tightly coupled compute. Combined with AWS’s networking, clustering, and virtualization technologies, including the Elastic Fabric Adapter (EFA), EC2 UltraClusters, and the Nitro System, they are intended to support large-scale, highly efficient AI model training.

Adam Selipsky, CEO of AWS, said: “AWS and NVIDIA have collaborated for more than 13 years, beginning with the world’s first GPU cloud instance. Today, we offer the widest range of NVIDIA GPU solutions for workloads including graphics, gaming, high performance computing, machine learning, and now, generative AI. We continue to innovate with NVIDIA to make AWS the best place to run GPUs, combining next-gen NVIDIA Grace Hopper Superchips with AWS’s EFA powerful networking, EC2 UltraClusters’ hyper-scale clustering, and Nitro’s advanced virtualization capabilities.”

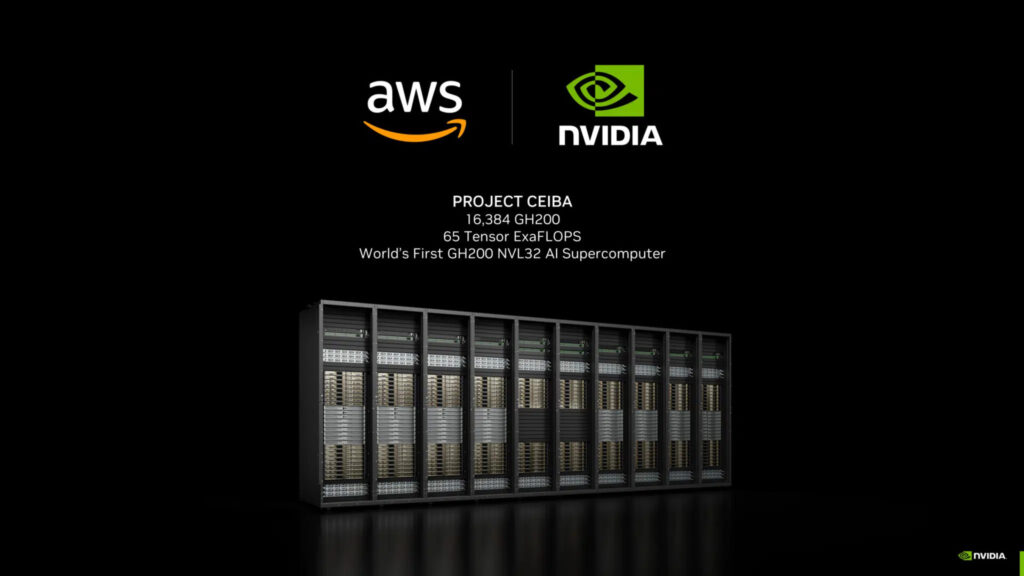

Further cementing the alliance, NVIDIA’s AI training service, DGX Cloud, will be hosted on AWS. Built on the GH200 NVL32, which links 32 Grace Hopper Superchips into a single NVLink-connected system, the service gives developers a large pool of shared memory suited to training advanced generative AI and large language models.

The partnership’s most ambitious element is Project Ceiba, an effort to build the world’s fastest GPU-powered AI supercomputer. The system will combine AWS’s networking technology with NVIDIA’s GH200 NVL32 to support large-scale AI research and development.

Jensen Huang, founder and CEO of NVIDIA, said: “Generative AI is transforming cloud workloads and putting accelerated computing at the foundation of diverse content generation. Driven by a common mission to deliver cost-effective, state-of-the-art generative AI to every customer, NVIDIA and AWS are collaborating across the entire computing stack, spanning AI infrastructure, acceleration libraries, foundation models, to generative AI services.”

AWS also announced three new Amazon EC2 instance types. P5e instances, powered by NVIDIA H200 Tensor Core GPUs, are designed for advanced AI and HPC workloads. G6 and G6e instances, equipped with NVIDIA L4 and L40S GPUs respectively, serve a broad range of applications, from AI fine-tuning to 3D workflows.

Adding to the arsenal, NVIDIA has introduced new software on AWS to support generative AI development. The NVIDIA NeMo Retriever microservice provides tools for building chatbots and summarization applications, while NVIDIA BioNeMo, available on Amazon SageMaker, helps accelerate pharmaceutical research.

The collaboration is as much a strategic move as a technological one, positioning AWS and NVIDIA at the forefront of generative AI. Pairing AWS’s cloud infrastructure with NVIDIA’s hardware and software expertise gives developers, researchers, and businesses a single platform for building generative AI, and reflects a shared goal of making high-performance computing more accessible and efficient for these workloads.

Taken together, these announcements mark a significant milestone in the evolution of generative AI. By combining their respective technological strengths, AWS and NVIDIA aim to set a new standard for AI supercomputing and to give marketers and developers alike the tools to build more advanced, efficient, and scalable AI solutions.