Perplexity recently unveiled improvements to its Copilot feature as part of its push to advance AI search. The upgrade relies on a fine-tuned GPT-3.5 Turbo model from OpenAI. The announcement also introduced Code Llama to Perplexity’s LLaMa Chat, a boon for developers eager to tap into Meta’s freshly released open-source large language model.

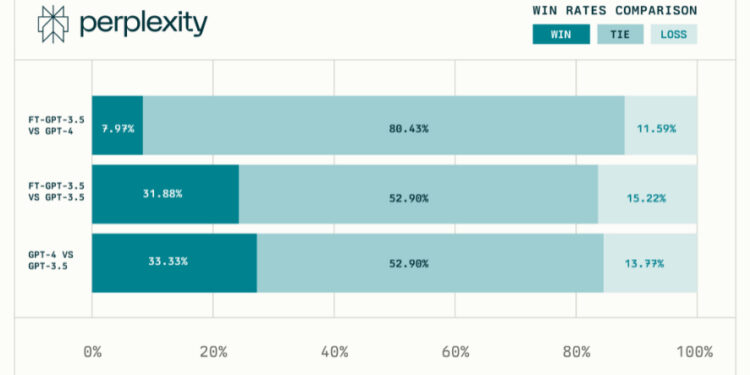

Copilot’s recent changes deliver faster response times while maintaining the high-quality search output that Perplexity users have come to expect. A closer look reveals that the newly integrated GPT-3.5 model matches the performance of its GPT-4 counterpart in human ranking tasks. Beyond speed, the focus remains on delivering precise answers to intricate user questions.

Perplexity has cut its model latency by a factor of roughly 4 to 5. In practical terms, results are now served in 0.65 seconds, down from the earlier 3.15 seconds. The difference is hard to miss, especially when Copilot asks the user for input. It’s not all about speed, though: the transition to GPT-3.5 has also brought down inference costs. Those savings leave room for further updates aimed at enriching the user experience on the platform.
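To see how the two reported latencies relate to the "4-5 times" figure, the arithmetic can be sketched in a few lines of Python. This is only a back-of-the-envelope check using the 3.15-second and 0.65-second numbers quoted above; the function names `speedup` and `percent_reduction` are illustrative and not part of any Perplexity tooling.

```python
def speedup(before_s: float, after_s: float) -> float:
    """Return how many times shorter the new latency is than the old one."""
    if before_s <= 0 or after_s <= 0:
        raise ValueError("latencies must be positive")
    return before_s / after_s


def percent_reduction(before_s: float, after_s: float) -> float:
    """Return the share of the original latency that was removed, in percent."""
    if before_s <= 0:
        raise ValueError("original latency must be positive")
    return (before_s - after_s) / before_s * 100.0


# Latencies quoted in the article, in seconds.
BEFORE_S = 3.15
AFTER_S = 0.65

factor = speedup(BEFORE_S, AFTER_S)
saved = percent_reduction(BEFORE_S, AFTER_S)

print(f"speedup: {factor:.2f}x")            # speedup: 4.85x
print(f"latency reduction: {saved:.1f}%")   # latency reduction: 79.4%
```

The ratio comes out at about 4.85, which sits inside the quoted 4-5x range; put differently, roughly 79% of the original wait has been removed.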

Perplexity’s steps towards refining its AI search signal a promising direction for AI-driven tools. These enhancements, focused on speed, precision, and cost efficiency, could set new industry benchmarks. As AI platforms vie for user attention, integrating cutting-edge features and ensuring a seamless user experience become paramount. Perplexity seems to be leading this charge, and it will be intriguing to see how competitors respond.